Beginner's guide to web scraping with Python's Selenium

- Mar 16, 2021 Selenium is a browser automation tool that can be used to scrape information from HTML web pages, here by driving Google Chrome. Scrape carefully: many websites prohibit scraping in their terms of service.

A web scraping script can access a URL directly using HTTP requests or by simulating a web browser. The second approach is exactly how Selenium works: it drives a real browser. The big advantage of this is that the page is fully rendered, whether it relies on JavaScript or is plain static HTML. In this web scraping tutorial, we want to use Selenium to navigate to Reddit’s homepage, use the search box to perform a search for a term, and scrape the headings of the results. Reddit uses JavaScript to render content dynamically, so it’s a good way of demonstrating how to scrape more advanced websites. As with every “web scraping with Selenium” tutorial, you have to download the appropriate driver to interface with the browser you’re going to use for scraping. Since we’re using Chrome, download the driver for the version of Chrome you’re using. The next step is to add it to the system path.

In the first part of this series, we introduced the concept of web scraping using two Python libraries, requests and BeautifulSoup. The results were then stored in a JSON file. In this walkthrough, we'll tackle web scraping with a slightly different approach using the selenium Python library. We'll then store the results in a CSV file using the pandas library.

The code used in this example is on GitHub.

Why use selenium

Selenium is a framework designed to automate tests for web applications. You can write a Python script to control browser interactions automatically, such as link clicks and form submissions. In addition to all this, Selenium comes in handy when we want to scrape data from JavaScript-generated content on a webpage, that is, when the data only shows up after many AJAX requests. Nonetheless, both BeautifulSoup and Scrapy are perfectly capable of extracting data from a webpage. The choice of library boils down to how the data on that particular webpage is rendered.

Another problem you might encounter while web scraping is having your IP address blacklisted. I partnered with Scraper API, a startup specializing in strategies that ease the worry of your IP address being blocked while web scraping. They use IP rotation so you can avoid detection, boasting over 20 million IP addresses and unlimited bandwidth.

In addition to this, they provide CAPTCHA handling as well as a headless browser, so that you'll appear to be a real user and not get detected as a web scraper. For more on its usage, check out my post on web scraping with Scrapy, although you can use it with both BeautifulSoup and Selenium as well.

If you want more info as well as an intro to the Scrapy library, check out my post on the topic.

Using this Scraper API link and the code lewis10, you'll get a 10% discount off your first purchase!

For additional resources on the selenium library and best practices, see this article by Towards Data Science and this one by AccordBox.

Setting up

We'll be using two Python libraries: selenium and pandas. To install them, simply run `pip install selenium pandas`.

In addition to this, you'll need a browser driver to simulate browser sessions. Since I am on Chrome, we'll be using that for the walkthrough.

Driver downloads

- Chrome.

Getting started

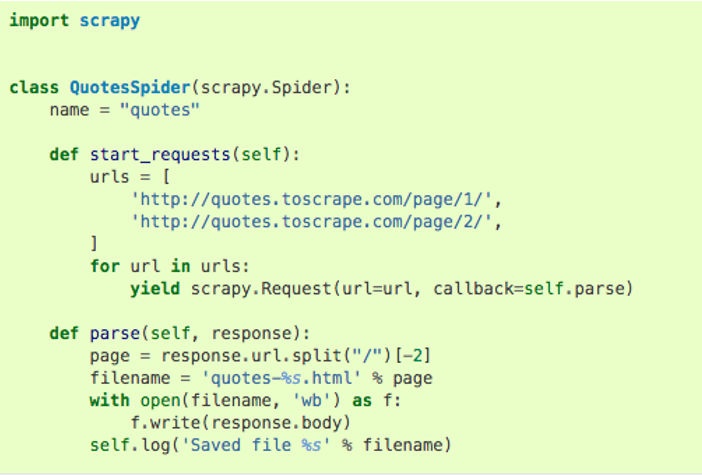

For this example, we'll be extracting data from Quotes to Scrape, a site made specifically for practising web scraping. We'll extract all the quotes and their authors and store them in a CSV file.

The code above imports the Chrome driver and the pandas library. We then make an instance of Chrome using `driver = Chrome(webdriver)`. Note that the `webdriver` variable points to the driver executable we downloaded previously for our browser of choice. If you happen to prefer Firefox, import `Firefox` from `selenium.webdriver` instead.
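The driver setup described here can be sketched roughly as follows. This is a minimal sketch, not the original script: `make_driver` is a hypothetical helper, and it assumes Selenium 4, where the driver path is passed through a `Service` object rather than positionally as in `Chrome(webdriver)`.

```python
def make_driver(browser="chrome", driver_path=None):
    """Return a Selenium WebDriver for Chrome or Firefox (hypothetical helper)."""
    browser = browser.lower()
    if browser not in ("chrome", "firefox"):
        raise ValueError(f"unsupported browser: {browser!r}")

    # Imported lazily so the argument check above works without Selenium.
    from selenium import webdriver

    if browser == "chrome":
        from selenium.webdriver.chrome.service import Service
        driver_class = webdriver.Chrome
    else:
        from selenium.webdriver.firefox.service import Service
        driver_class = webdriver.Firefox

    # Assumption: Selenium 4 style. With no explicit path, Selenium falls
    # back to looking up the driver on its own.
    service = Service(executable_path=driver_path) if driver_path else Service()
    return driver_class(service=service)
```

With this in place, `driver = make_driver("chrome", "/path/to/chromedriver")` would start a Chrome session, where the path is a placeholder for wherever you saved the driver.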

Main script

On close inspection of the site's URL, we'll notice that the pagination URL is http://quotes.toscrape.com/js/page/{{current_page_number}}/

where the last part is the current page number. Armed with this information, we can proceed to make a page variable to store the exact number of web pages to scrape data from. In this instance, we'll be extracting data from just 10 web pages in an iterative manner.
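As a small illustration of that pattern, the ten page URLs can be generated up front. The names `BASE_URL` and `page_urls` are my own for this sketch and are not taken from the original script.

```python
# URL pattern from the walkthrough; {page} stands in for the page number.
BASE_URL = "http://quotes.toscrape.com/js/page/{page}/"


def page_urls(pages=10):
    """Build the URLs for pages 1..pages (inclusive)."""
    return [BASE_URL.format(page=number) for number in range(1, pages + 1)]


for url in page_urls(3):
    print(url)
```

Each of these URLs can then be passed to `driver.get(url)` in turn.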

The `driver.get(url)` command makes an HTTP GET request to our desired webpage. From here, it's important to know the exact number of items to extract from the webpage. From our previous walkthrough, we defined web scraping as:

This is the process of extracting information from a webpage by taking advantage of patterns in the web page's underlying code.

We can use web scraping to gather unstructured data from the internet, process it and store it in a structured format.

On inspecting each quote element, we observe that each quote is enclosed within a div with the class name quote. By calling `driver.find_elements_by_class_name('quote')` we get a list of all elements on the page matching this pattern. (In Selenium 4 the equivalent call is `driver.find_elements(By.CLASS_NAME, 'quote')`.)

Final step

To begin extracting the information from the webpages, we'll take advantage of the aforementioned patterns in the web pages' underlying code.

We'll start by iterating over the quote elements, which allows us to go over each quote and extract a specific record. From the picture above, we notice that the quote is enclosed within a span of class text and the author within a small tag with a class name of author.

Finally, we store the quote_text and author variables in a tuple, which we append to the Python list named total.

Using the pandas library, we'll create a dataframe to store all the records (the total list) and specify the column names as quote and author. Finally, we export the dataframe to a CSV file, which we named quoted.csv in this case.
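The extraction loop described above might look roughly like this. It is a sketch under assumptions: `scrape_page` is a hypothetical helper, and it uses the Selenium 4 `find_elements(By.CLASS_NAME, ...)` form rather than the older `find_elements_by_class_name` call.

```python
try:
    from selenium.webdriver.common.by import By
    CLASS_NAME = By.CLASS_NAME
except ImportError:
    # Same string value as By.CLASS_NAME; keeps the sketch loadable
    # on a machine where Selenium is not installed.
    CLASS_NAME = "class name"


def scrape_page(driver):
    """Collect (quote_text, author) tuples from the page currently loaded."""
    total = []
    # Every quote sits in an element with the class "quote".
    for quote in driver.find_elements(CLASS_NAME, "quote"):
        # Inside it, the quote text has class "text", the author class "author".
        quote_text = quote.find_element(CLASS_NAME, "text").text
        author = quote.find_element(CLASS_NAME, "author").text
        total.append((quote_text, author))
    return total
```

Calling `scrape_page(driver)` after each `driver.get(url)` and extending one shared list would build up the records for all pages.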
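A minimal sketch of that export step is shown below. `save_quotes` is a hypothetical helper name, and passing `index=False` is my own choice to keep the row index out of the file; the original script may not have done so.

```python
def save_quotes(total, path="quoted.csv"):
    """Write a list of (quote, author) tuples to a CSV file with pandas."""
    # Imported here so that this sketch loads even where pandas is absent.
    import pandas as pd

    df = pd.DataFrame(total, columns=["quote", "author"])
    df.to_csv(path, index=False)  # assumption: omit the numeric row index
    return df
```

For example, `save_quotes(total)` would write every collected record to quoted.csv in the current directory.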

Don't forget to close the chrome driver using driver.close().
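One way to make sure the browser is shut down even when the scraping code raises an error is a try/finally wrapper. The helper below is a hypothetical sketch, not part of the original script. It calls `driver.quit()`, which ends the whole browser session, whereas `driver.close()` only closes the current window.

```python
def run_with_driver(make_driver, task):
    """Create a driver, run task(driver), and always shut the driver down."""
    driver = make_driver()
    try:
        return task(driver)
    finally:
        # Runs whether task() returned normally or raised an exception.
        driver.quit()
```

Here `make_driver` is any zero-argument function that returns a driver, and `task` is the function that does the actual scraping.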

Additional resources

1. Finding elements

You'll notice that I used the `find_elements_by_class_name` method in this walkthrough. This is not the only way to find elements. This tutorial by Klaus explains in detail how to use other selectors.

2. Video

If you prefer to learn using videos, this series by Lucid programming was very useful to me. https://www.youtube.com/watch?v=zjo9yFHoUl8

3. Best practices while using selenium

4. Toptal's guide to modern web scraping with selenium

And with that, hopefully, you too can make a simple web scraper using selenium 😎.

If you enjoyed this post, subscribe to my newsletter to get notified whenever I write new posts.

Open to collaboration

I recently made a collaborations page on my website. Have an interesting project in mind or want to fill a part-time role? You can now book a session with me directly from my site.

Thanks.

A while back I wrote a post on how to scrape web pages using C# and HtmlAgilityPack (It was in May? So long ago? Wow!). This works fine for static pages, but most web pages are dynamic apps where elements appear and disappear when you interact with the page. So we need a better solution.

Selenium is an open-source application/library that lets us automate browsing using code. And it is awesome. In this tutorial, I’m going to show how to scrape a simple dynamic web page that changes when an element is clicked. A prerequisite for this tutorial is having the Chrome browser installed on your computer (more on that later).

Let’s start by creating a new .NET Core project:

To use Selenium we need two things: a Selenium WebDriver which interacts with the browser, and the Selenium library which connects our code with the Selenium WebDriver. You can read more in the docs. Gladly, both of them come as NuGet packages that we can add to the solution. We’ll also add a library that provides some Selenium extensions:

One important thing to note when you install these packages is the version of the Selenium.WebDriver.ChromeDriver that is installed, which looks something like this: PackageReference for package 'Selenium.WebDriver.ChromeDriver' version '85.0.4183.8700'. The major version of the driver (in this case 85) must match the major version of the Chrome browser that is installed on your computer (you can check the version you have by going to Help->About in your browser).
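The version rule above boils down to comparing the first dotted component of the two version strings. A small Python sketch of that check follows, with hypothetical helper names and an illustrative browser version string that is not taken from the post.

```python
def major_version(version):
    """Return the leading component of a dotted version string as an int."""
    return int(version.split(".")[0])


def versions_compatible(driver_version, browser_version):
    """True when the driver and the browser share the same major version."""
    return major_version(driver_version) == major_version(browser_version)


# Driver version from the package reference above; the browser version
# is only an illustrative value.
print(versions_compatible("85.0.4183.8700", "85.0.4183.121"))  # True
```

If this check fails, install the driver package whose major version matches your browser.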

To demonstrate the dynamic scraping, I’ve created a web page that has the word “Hello” on it, that when clicked, adds the word “World” below it. I’m not a web developer and don’t pretend to be, so the code here is probably ugly, but it does the job:

I added this page to the project and defined that the page must be copied to the output directory of the project so it can be easily consumed by the scraping code. This is achieved by adding the following lines to the DynamicWebScraping.csproj project file somewhere between the opening and closing Project nodes:

The scraping code will navigate to this page and wait for the heading1 element to appear. When it does it will click on the element and wait for the heading2 element to appear, fetching the textContent that is located in that element:

Let’s build and run the project:

The program opens a browser window and starts to interact with it, returning the text inside the second heading. Pretty cool, right? I have to admit that the first time I ran this it felt really powerful, and it opens a whole new world of things to build… If only I had more time :-).

As always, the full source code for this tutorial can be found in my GitHub repository.

Hoping that the next post comes sooner. Until next time, happy coding!